What is a Search Engine Results Page (SERP)?

For businesses and content creators, visibility in search engine results pages (SERPs) is central to online success. Yet every high-ranking page rests on two technical foundations: crawlability and indexability. Together they determine whether search engines can discover your content and include it in their indexes. If search engine bots can’t easily crawl or index your website, even the most spectacular content won’t reach its audience.

This guide walks you through actionable steps to enhance your website’s crawlability and indexability, which can lead to better rankings and improved visibility in SERPs.

The Importance of Crawlability and Indexability for SEO

Search engines like Google depend on bots (also called spiders or crawlers) to crawl, analyze, and then index content so users can find it. Crawlability refers to how easily these bots can navigate your site, while indexability determines whether a page can be added to the search engine’s database. If either is hindered, your website will struggle to earn organic traffic, no matter how much effort you put into the rest of your SEO.

Here’s the bottom line: ensuring seamless crawlability and indexability isn’t just beneficial—it’s essential for competitive visibility in SERPs.

Understand the Basics of Web Crawling and Indexing

The Role of Search Engine Bots

Search engines rely on bots to “crawl” websites, following links to discover new pages or updates. After crawling a page, the search engine decides whether to add it to its index, a massive database from which results are ranked by relevance and quality for each query. However, a poorly optimized site can confuse or block these bots, leading to missed opportunities for traffic.

Crawlability vs. Indexability

While crawlability is about search engines accessing your website’s content, indexability determines whether those crawled pages are stored and considered for ranking. Even if a search engine crawls your page, certain factors can prevent it from being indexed, such as a noindex robots meta tag, a canonical tag pointing to a different URL, or content the search engine judges to be thin or duplicate. Optimizing for both is critical.
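To make the distinction concrete, the sketch below checks the two standard ways a crawled page can opt out of indexing: a robots meta tag in the HTML and an X-Robots-Tag HTTP response header. It is a minimal Python sketch using only the standard library. It assumes you already have the page’s HTML and response headers in hand (fetching is out of scope), treats googlebot-specific meta tags the same as generic robots tags, and ignores user-agent-prefixed header values such as “googlebot: noindex”. The names is_indexable and RobotsMetaFinder are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives.extend(d.strip().lower() for d in content.split(","))


def is_indexable(html, headers=None):
    """Hypothetical helper: False if the page carries a noindex (or none) directive.

    Assumes `headers` is a plain dict with exact-case keys; user-agent-prefixed
    header values such as "googlebot: noindex" are not handled.
    """
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    directives = list(finder.directives)
    header_value = (headers or {}).get("X-Robots-Tag", "")
    directives.extend(d.strip().lower() for d in header_value.split(","))
    # "none" is shorthand for "noindex, nofollow".
    return not any(d in ("noindex", "none") for d in directives)


print(is_indexable('<meta name="robots" content="index, follow">'))  # True
print(is_indexable('<meta name="robots" content="noindex, follow">'))  # False
print(is_indexable("<p>Hello</p>", {"X-Robots-Tag": "noindex"}))  # False
```

A page that passes this check can still be left out of the index for other reasons (canonicalization, quality), so treat a True result as “not explicitly blocked” rather than “will be indexed”.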

Analyze Your Website’s Current Crawlability Status

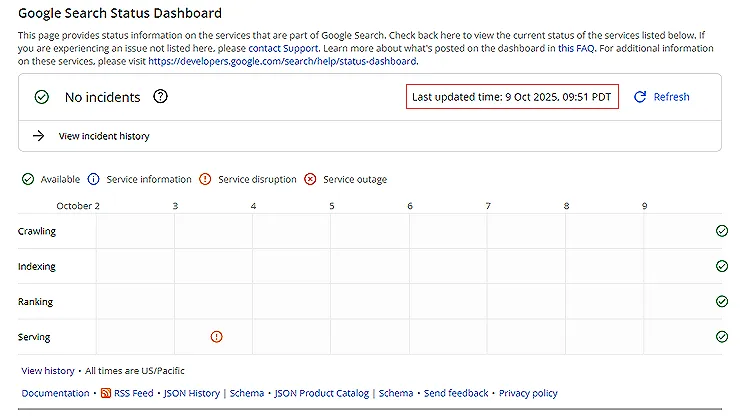

Start by conducting a thorough crawlability audit to identify issues that could hinder bots from analyzing your site. Tools like Google Search Console and Screaming Frog are invaluable for this task. Use them to pinpoint blocked pages, redirects, and other crawl obstacles. In Google Search Console, for instance, the Page indexing report (formerly the Coverage report) lists pages that couldn’t be indexed and the reason for each.
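Crawlers such as Screaming Frog can export their results as CSV. Assuming an export with one row per URL, a few lines of Python can turn it into a quick triage list: how many URLs returned each status class, and which redirects and errors to look at first. The column names “Address” and “Status Code” below are placeholders, not a guaranteed format for any particular tool, and summarize_crawl is a hypothetical helper; rename the columns to match your export.

```python
import csv
import io
from collections import Counter


def summarize_crawl(csv_text, url_column="Address", status_column="Status Code"):
    """Count crawled URLs per status class and list the ones needing attention.

    Assumption: CSV with one row per URL; the default column names are
    placeholders to adjust to your tool's export format.
    """
    counts = Counter()
    needs_attention = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        status = int(row[status_column])
        status_class = f"{status // 100}xx"
        counts[status_class] += 1
        if status >= 300:  # redirects (3xx) and errors (4xx, 5xx)
            needs_attention.setdefault(status_class, []).append(row[url_column])
    return counts, needs_attention


# Hypothetical sample export.
sample_export = """Address,Status Code
https://example.com/,200
https://example.com/old-page,301
https://example.com/missing,404
https://example.com/seo-tips,200
"""

counts, needs_attention = summarize_crawl(sample_export)
print(dict(counts))
print(needs_attention)
```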

Optimize Your Robots.txt File

Guide Crawlers Effectively

Your robots.txt file, served from the root of your domain (for example, example.com/robots.txt), tells search engine crawlers which parts of your site they may request. Use it to keep crawlers out of areas with no search value, such as admin panels, login pages, and other non-public sections. On a large site with many pages, this helps conserve your crawl budget for the pages that matter. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still show up in search results if other pages link to it, and the file itself is publicly readable. To keep a page out of the index, use a noindex directive instead (and leave that page crawlable so bots can see the directive), and protect genuinely private pages with authentication.

Keep the file simple and error-free so you don’t accidentally block important pages. Use the robots.txt report in Google Search Console to confirm that Google can fetch your file and to spot parsing errors or warnings. Also add a Sitemap line pointing to your XML sitemap so crawlers can find it more easily. Managing access this way keeps crawlers focused on your essential pages, so they are crawled and refreshed promptly.

Finally, review your robots.txt file whenever your site structure or SEO strategy changes. As a site grows, new sections appear and old ones are retired, so rules that were correct a year ago may now block valuable pages or leave low-value ones open. Regular reviews ensure search engines spend their crawling effort on your most valuable content.

Because robots.txt decides which pages bots may access, be cautious: overly restrictive rules can unintentionally block critical pages from being crawled. A single typo can lock bots out of your entire site. For example, shortening “Disallow: /admin/” to “Disallow: /” blocks every URL for every compliant crawler, a mistake that can tank your rankings in SERPs.
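Before deploying a change, you can sanity-check rules against sample URLs with Python’s standard-library urllib.robotparser. The sketch below compares a hypothetical intended file for example.com with a version in which the admin rule was mistakenly shortened to “Disallow: /”. One caveat: Python’s parser uses simple prefix matching, with the first matching rule winning. It does not implement Google’s wildcard patterns (* and $) or Google’s longest-match precedence, so treat it as a quick check and confirm the result in Search Console.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com: blocks the admin area and login page.
INTENDED = """\
User-agent: *
Disallow: /admin/
Disallow: /login
Sitemap: https://example.com/sitemap.xml
"""

# The same rule accidentally shortened to a bare slash: blocks everything.
TYPO = """\
User-agent: *
Disallow: /
"""


def parse_robots(text):
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


intended, typo = parse_robots(INTENDED), parse_robots(TYPO)
for url in ("https://example.com/blog/seo-tips", "https://example.com/admin/settings"):
    print(url, "intended:", intended.can_fetch("Googlebot", url),
          "typo:", typo.can_fetch("Googlebot", url))
print(intended.site_maps())  # ['https://example.com/sitemap.xml'] (Python 3.8+)
```

With the intended file, the blog post is crawlable and the admin page is not; with the typo, both are blocked.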


Check XML Sitemaps

Why XML Sitemaps Matter

An XML sitemap serves as a blueprint for search engines, pointing them directly to your site’s most important pages. It gives bots a clear list of URLs to visit, so they don’t depend solely on following links to find everything. Without a well-structured sitemap, bots may overlook key content, especially new pages or pages that few other pages link to. Listing all the URLs you want indexed helps search engines discover your content sooner, which is a prerequisite for ranking and earning organic traffic.

To get the full benefit, keep your sitemap up to date and properly structured. Update it whenever you add or remove pages, list only canonical URLs that return a 200 status, and give each entry an accurate lastmod date so bots can spot pages that changed. (Google ignores the optional priority and changefreq fields, so don’t rely on them to signal importance.) Then submit the sitemap through Google Search Console. A well-maintained sitemap helps search engines understand which pages you consider important and makes new and updated content more likely to be crawled and indexed promptly.

A sitemap also doubles as a diagnostic tool. Search Console’s Sitemaps report shows whether your sitemap was read successfully, and filtering the Page indexing report to submitted pages reveals URLs that are listed but not indexed. These insights can inform your broader SEO strategy and help you make data-driven decisions about content creation and optimization. In short, creating and maintaining an accurate XML sitemap is a small investment that makes your most important pages easier for search engines to find.
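If your CMS doesn’t generate a sitemap for you, building one is straightforward. Below is a minimal Python sketch using the standard library’s xml.etree.ElementTree. It emits the urlset/url/loc/lastmod structure defined by the sitemaps.org protocol. The build_sitemap name, the page list, and the dates are hypothetical placeholders you would replace with data from your own site.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Hypothetical helper: build a minimal XML sitemap.

    `pages` is a list of (url, lastmod) pairs, where lastmod is a W3C date
    such as "2024-05-01". Include only canonical URLs you want indexed.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes "&" as "&amp;"
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages and dates.
sitemap = build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/seo-tips", "2024-04-18"),
])
print(sitemap)
```

Because ElementTree escapes special characters automatically, URLs with query strings (which contain “&”) come out entity-escaped, as the protocol requires. Save the result as a UTF-8 file.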


Fix Broken Links and Redirect Errors

The Cost of Broken Links

Broken links (links to URLs that return a 404 error) act as dead ends for bots. Faulty redirects, such as long redirect chains or loops, waste crawl resources or stop bots before they reach the content. Both also frustrate users, which can hurt your overall SEO performance.

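Tools like Screaming Frog can identify broken links and unnecessary redirects. Fix them promptly: update or remove links to missing pages, 301-redirect removed pages to their closest replacement, and point internal links straight at the final destination URL.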
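Redirect chains and loops are easy to detect once you have a list of redirects, for example from a crawl export. The hypothetical trace_redirects function below follows a source-to-target map and reports whether a URL resolves in at most one hop, passes through a chain, or loops. The redirect map and the 10-hop safety limit are illustrative assumptions, not values taken from any specific tool.

```python
def trace_redirects(start_url, redirects, max_hops=10):
    """Hypothetical helper: follow `redirects` (source URL -> target URL).

    Returns (chain, problem), where problem is None, "chain", "loop",
    or "too many hops".
    """
    chain = [start_url]
    seen = {start_url}
    current = start_url
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, "loop"
        seen.add(current)
        if len(chain) - 1 > max_hops:
            return chain, "too many hops"
    # More than one hop: links should point straight at the final URL instead.
    return chain, ("chain" if len(chain) > 2 else None)


# Hypothetical redirect map, e.g. assembled from a crawl export.
redirects = {
    "/old-page": "/older-page",
    "/older-page": "/new-page",
    "/a": "/b",
    "/b": "/a",
    "/moved": "/final",
}
for url in ("/old-page", "/a", "/moved"):
    print(url, trace_redirects(url, redirects))
```

Any URL flagged as a chain is a candidate for updating its internal links, and the first redirect, to point directly at the final destination.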

Ensure Mobile-Friendliness

With Google’s mobile-first indexing, Google primarily crawls and indexes the mobile version of your pages, so a mobile-friendly website is no longer optional. Content that is missing or broken on the mobile version may not be indexed at all, and a poor mobile experience can also hurt your rankings.

Test your site’s mobile performance with Lighthouse (built into Chrome DevTools) or PageSpeed Insights; Google retired its standalone Mobile-Friendly Test tool in December 2023. Focus on creating layouts that adapt seamlessly to mobile screens by optimizing images, simplifying navigation, and improving load times.
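One quick, scriptable check is whether a page declares a responsive viewport. Without one, mobile browsers render the page at a desktop-sized width and scale it down. The Python sketch below, built around a hypothetical has_responsive_viewport helper and the standard library’s html.parser, looks for a viewport meta tag containing width=device-width. Treat it as a first filter only: a correct viewport is necessary but far from sufficient for a mobile-friendly page.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        is_viewport = tag == "meta" and (attributes.get("name") or "").lower() == "viewport"
        if is_viewport and self.viewport is None:
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html):
    """Hypothetical helper: True if the page asks browsers to use the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "").lower()


print(has_responsive_viewport(
    '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
))
print(has_responsive_viewport("<head><title>Desktop-only page</title></head>"))
```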

Improve Page Loading Speed

Fast-responding pages let search engine bots crawl more of your site in the same amount of time, and page speed is part of the page experience signals Google considers when ranking. Slow loading isn’t just a user experience setback: when a server responds slowly, crawlers reduce how many requests they make, so fewer of your pages get crawled during each visit.

Use tools like GTmetrix and PageSpeed Insights to measure your website’s speed. Speed up load times by compressing images, enabling browser caching, and minifying CSS and JavaScript files.
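Browser caching is usually configured on your web server or CDN, but the decision logic is simple enough to sketch. The hypothetical Python helper below picks a Cache-Control header value per request path: long-lived caching for static assets and revalidation for HTML. It assumes your build fingerprints asset file names (such as app.3f9a1c.css), which is what makes a one-year max-age with immutable safe.

```python
from pathlib import PurePosixPath

# Hypothetical policy. Assumes static assets are fingerprinted (e.g. app.3f9a1c.css),
# so a changed file always gets a new URL and can safely be cached for a year.
LONG_LIVED_SUFFIXES = {".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2"}


def cache_control_for(path):
    """Hypothetical helper: pick a Cache-Control header value for a request path."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in LONG_LIVED_SUFFIXES:
        return "public, max-age=31536000, immutable"
    # HTML and everything else: browsers may store it but must revalidate before reuse.
    return "no-cache"


for path in ("/static/app.3f9a1c.css", "/images/hero.webp", "/blog/seo-tips"):
    print(path, "->", cache_control_for(path))
```

If your asset names aren’t fingerprinted, use a much shorter max-age so visitors pick up changes.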

Use a Clear and Logical URL Structure

URLs provide valuable context for both users and search engines. A cluttered, complex URL structure not only confuses crawlers but can also lower user trust.

Guidelines for SEO-Friendly URLs

- Use descriptive keywords.

- Avoid unnecessary parameters.

- Separate words with hyphens rather than underscores.

A straightforward URL like example.com/seo-tips is easier for both crawlers and users to interpret than a cryptic one like example.com/?p=12345.
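Most CMSs generate URL slugs automatically, but the guidelines above are easy to apply yourself. This minimal Python sketch uses slugify, a hypothetical hand-rolled helper rather than a call into any slug library. It lowercases a page title, folds accented letters to ASCII, and joins words with hyphens, replacing spaces, underscores, and punctuation.

```python
import re
import unicodedata


def slugify(title):
    """Hypothetical helper: turn a page title into a lowercase, hyphen-separated slug."""
    # Decompose accented letters (é -> e + accent mark), then drop the non-ASCII marks.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace each run of anything other than a-z or 0-9 (spaces, underscores,
    # punctuation) with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


print(slugify("SEO Tips for Small Businesses!"))  # seo-tips-for-small-businesses
print(slugify("Crème brûlée_recipe"))  # creme-brulee-recipe
```

Characters with no ASCII equivalent (for example, Chinese or Cyrillic text) are dropped entirely by this approach, so sites in those languages need a different strategy.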

Check Your Website’s Internal Linking

Internal linking is one of the simplest yet most impactful crawlability strategies. It helps bots discover new pages while distributing link equity across your site.

Best Practices

- Link strategically to deeper content.

- Use descriptive anchor text.

- Avoid excessive linking from one page, which can appear spammy.
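To audit internal links on a page, you can collect every link, keep the ones that stay on your domain, and flag anchors that say nothing about the target. The Python sketch below does this with the standard library’s html.parser and urllib.parse. The helper names, the GENERIC_ANCHORS list, and the example page are hypothetical, and the host comparison is deliberately strict, so www.example.com and example.com count as different hosts.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

# Hypothetical list of anchor texts that say nothing about the target page.
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more"}


class LinkCollector(HTMLParser):
    """Collects (absolute URL, anchor text) pairs for every <a href> on a page."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            anchor = " ".join("".join(self._text).split())  # collapse whitespace
            self.links.append((urljoin(self.page_url, self._href), anchor))
            self._href = None


def internal_links(page_url, html):
    """Hypothetical helper: return links that stay on the same host as page_url."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    collector.close()
    host = urlsplit(page_url).netloc
    return [(url, text) for url, text in collector.links if urlsplit(url).netloc == host]


def generic_anchor_links(links):
    """Hypothetical helper: URLs whose anchor text is on the generic list."""
    return [url for url, text in links if text.lower() in GENERIC_ANCHORS]


# Hypothetical page content.
page = """
<p>Start with our <a href="/seo-tips">SEO tips for small businesses</a>,
or see <a href="https://other-site.example/">a partner guide</a>.
Pricing details: <a href="pricing">click here</a>.</p>
"""
links = internal_links("https://example.com/blog/", page)
print(links)
print(generic_anchor_links(links))
```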

Avoid Duplicate Content

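Duplicate content confuses crawlers: when the same content is reachable at several URLs, search engines have to choose which version to index, and ranking signals such as backlinks can be split across the competing pages, diluting your SEO equity.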

Solutions

- Use canonical tags to specify the preferred URL for duplicated content.

- Consolidate similar pages with 301 redirects.
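Duplicate URLs often come from small variations: a trailing slash, an uppercase host name, or tracking parameters. The Python sketch below normalizes URLs under a few stated assumptions and groups the variants. The assumptions are that scheme and host are case-insensitive, that utm_* parameters and parameter order never change the content, and that /page and /page/ serve the same content. Each resulting group is a candidate for a single canonical URL, declared with a canonical tag or enforced with 301 redirects. The normalize and duplicate_groups helpers are hypothetical; adjust the rules to match how your server actually behaves.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def normalize(url):
    """Hypothetical normalization rules; adjust them to how your server behaves."""
    parts = urlsplit(url)
    # Drop tracking parameters (assumed never to change the content) and sort
    # the rest (assumes parameter order doesn't matter).
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.startswith("utm_")
    )
    # Assume /page and /page/ serve the same content; keep "/" for the root.
    path = parts.path.rstrip("/") or "/"
    # Scheme and host are case-insensitive; the fragment is never sent to the server.
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), ""))


def duplicate_groups(urls):
    """Group URL variants that normalize to the same address."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


# Hypothetical crawled URLs.
crawled = [
    "https://Example.com/seo-tips/",
    "https://example.com/seo-tips?utm_source=newsletter",
    "https://example.com/seo-tips",
    "https://example.com/contact",
]
for canonical, variants in duplicate_groups(crawled).items():
    print(canonical, "<-", variants)
```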

Focus on Quality Content

Exceptional content encourages bots to crawl your site more frequently and helps your pages rank higher in SERPs. Aim for unique, informative content that targets relevant keywords and aligns with user intent.

A personal tip: Write content that answers specific search queries. For example, an article optimized for “SEO tips for small businesses” is far more likely to rank for that query than a generic one.

Enable HTTPS for Security

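Google uses HTTPS as a ranking signal, albeit a lightweight one, and when a page is available over both HTTP and HTTPS it generally prefers to index the HTTPS version. HTTPS also protects your visitors’ data and avoids the “Not secure” warning browsers display on HTTP pages.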

How to Transition

- Install an SSL certificate.

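- Redirect every HTTP URL to its HTTPS equivalent with a permanent (301) redirect.

- Add the HTTPS version of your site to Google Search Console (a Domain property covers both HTTP and HTTPS) and resubmit your sitemap with HTTPS URLs.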
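Your web server or CDN normally performs the HTTP-to-HTTPS redirect, but the mapping it should implement is worth spelling out: every HTTP URL goes to the identical HTTPS URL with a permanent (301) redirect, not to the homepage. The hypothetical Python function below expresses that rule. It assumes HTTPS runs on the default port.

```python
from urllib.parse import urlsplit


def https_redirect(url):
    """Hypothetical helper: return (301, https_url) for an http:// URL, else None.

    Assumes HTTPS is served on the default port; URLs with an explicit port
    such as :8080 would need their port rewritten too.
    """
    parts = urlsplit(url)
    if parts.scheme != "http":
        return None
    # Keep host, path, and query intact so each page maps to its exact HTTPS twin.
    return 301, parts._replace(scheme="https").geturl()


print(https_redirect("http://example.com/seo-tips?page=2"))
print(https_redirect("https://example.com/seo-tips"))
```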

Remove Orphan Pages

Orphan pages are those without any internal links pointing to them. Bots cannot easily discover or crawl these pages.

Solution

Identify orphan pages using tools like Screaming Frog, then link to each one from related pages within your site architecture.
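Finding orphan pages comes down to a set difference: the URLs you expect to be indexed minus the URLs that some other page links to. The Python sketch below assumes you already have both inputs, for example the URL list from your XML sitemap and a link graph from a crawl seeded with that sitemap. The find_orphans helper and the sample data are hypothetical. Self-links are ignored because a page linking to itself doesn’t help bots find it.

```python
def find_orphans(known_urls, link_graph, homepage="/"):
    """Hypothetical helper: return known URLs that no other crawled page links to.

    known_urls: pages you expect to be indexed (e.g. the URLs in your sitemap).
    link_graph: maps each crawled page to the internal URLs it links to.
    """
    linked = set()
    for source, targets in link_graph.items():
        linked.update(target for target in targets if target != source)  # ignore self-links
    # The homepage is the crawl's entry point, so it is never treated as an orphan.
    return sorted(set(known_urls) - linked - {homepage})


# Hypothetical sitemap URLs and link graph.
sitemap_urls = ["/", "/seo-tips", "/pricing", "/old-landing-page"]
link_graph = {
    "/": ["/seo-tips", "/pricing"],
    "/seo-tips": ["/pricing", "/seo-tips"],
    "/old-landing-page": ["/old-landing-page"],
}
print(find_orphans(sitemap_urls, link_graph))  # ['/old-landing-page']
```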

Monitor and Optimize Regularly

Crawlability and indexability aren’t set-and-forget processes. Use Google Search Console regularly to detect and resolve crawl errors while keeping a close eye on algorithm updates.

Pro Tip

Set up automated alerts in your SEO tools to address issues as soon as they arise. Trends shift quickly, so staying proactive is key.
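If your tools don’t offer the alert you need, a scheduled script can compare two crawl snapshots and flag pages that stopped returning a 200 status. The sketch below assumes each snapshot is a simple URL-to-status mapping; status_regressions is a hypothetical helper and the data shown is made up. It only prints alerts, and wiring the output to email or chat is left to your own setup.

```python
def status_regressions(previous, current):
    """Hypothetical helper: compare two crawl snapshots (URL -> HTTP status code).

    Returns URLs that returned 200 before but now return another status or
    were missing from the latest crawl (reported as None).
    """
    return {
        url: (old_status, current.get(url))
        for url, old_status in previous.items()
        if old_status == 200 and current.get(url) != 200
    }


# Hypothetical snapshots from two scheduled crawls.
last_week = {"/": 200, "/seo-tips": 200, "/pricing": 200, "/old-page": 301}
this_week = {"/": 200, "/seo-tips": 404, "/old-page": 301}

for url, (before, after) in status_regressions(last_week, this_week).items():
    # Replace print with whatever alerting channel your team uses.
    print(f"ALERT {url}: {before} -> {after}")
```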

To Summarize

Crawlability and indexability form the foundation for strong SEO. By following the steps outlined above, from structuring URLs properly to fixing broken links, you can create a site that search engine bots love to crawl and index.

For long-term results, continuously monitor updates and fine-tune your efforts. By prioritizing these factors, you’ll unlock your website’s full potential in SERPs, driving organic traffic and achieving your SEO goals.